A descriptive, not prescriptive, overview of current AI Alignment Research

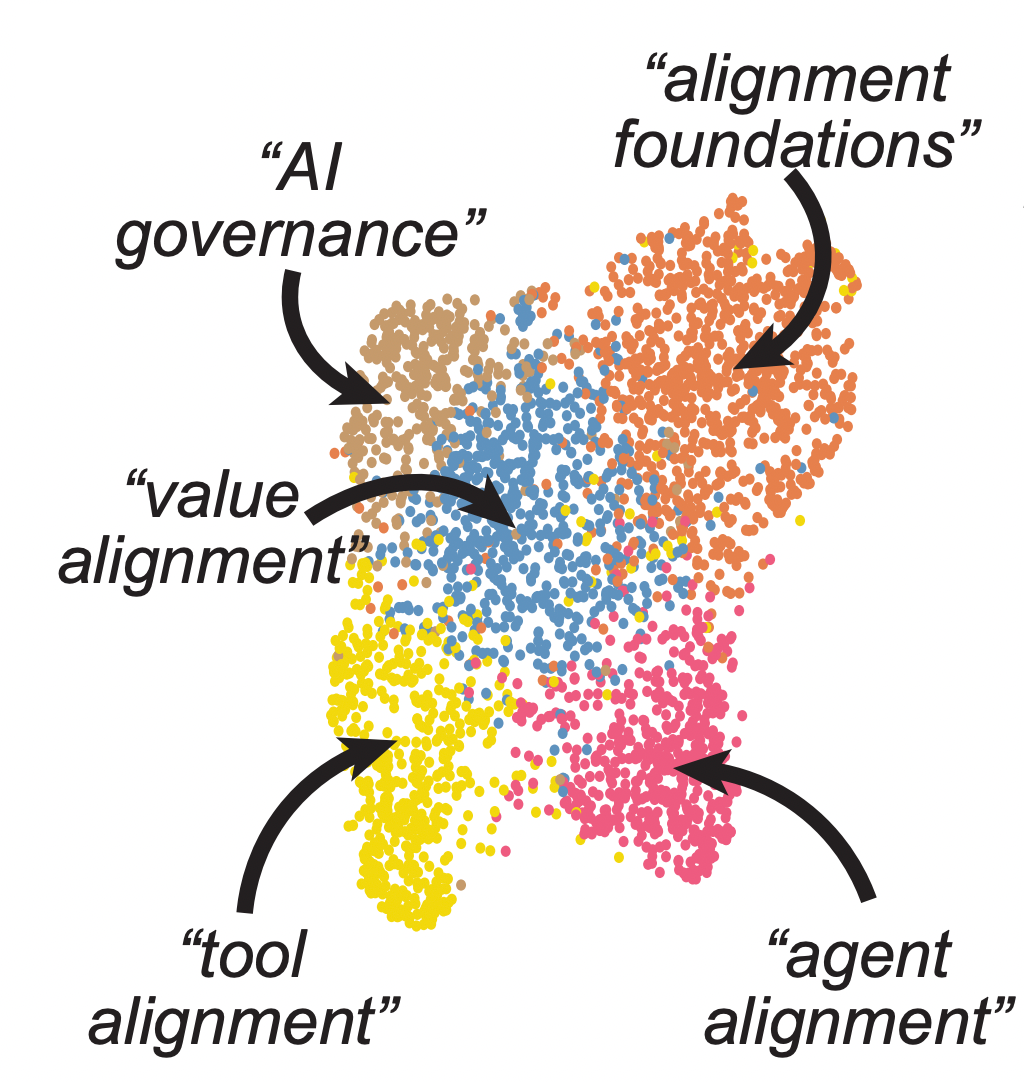

TL;DR: In this project, we collected and cataloged AI alignment research literature and analyzed the resulting dataset in an unbiased way to identify major research directions. We found that the field is growing quickly, with several subfields emerging in parallel. We looked at the subfields and identified the prominent researchers, recurring topics, and different modes of communication in each. Furthermore, we found that a classifier trained on AI alignment research articles can detect relevant articles that we did not originally include in the dataset.

(video presentation here)

This is a project I worked on during the AI Safety Camp. We wrote a paper and released a dataset.

Here's a link to the LessWrong post about this project.

This was only the first stage in this research direction. In a previous post, I shared a survey we released. We expect to use what we learned from the survey, and from speaking with alignment researchers, to build tools that help accelerate alignment research. Jan Leike from OpenAI also finds this direction interesting: he has called it his "favored approach to solving the alignment problem" and mentioned our work in his latest post. I was recently awarded an LTFF grant to continue working in this direction, so I'll likely be working on this in late September.