Is the "Valley of Confused Abstractions" real?

Epistemic Status: Quite confused. Using this short post as a signal for discussion.

Here's a link to the LessWrong post for discussion.

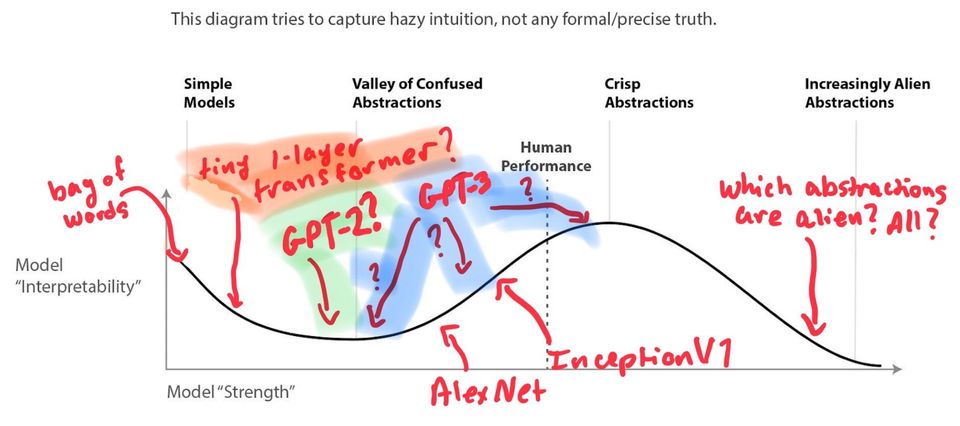

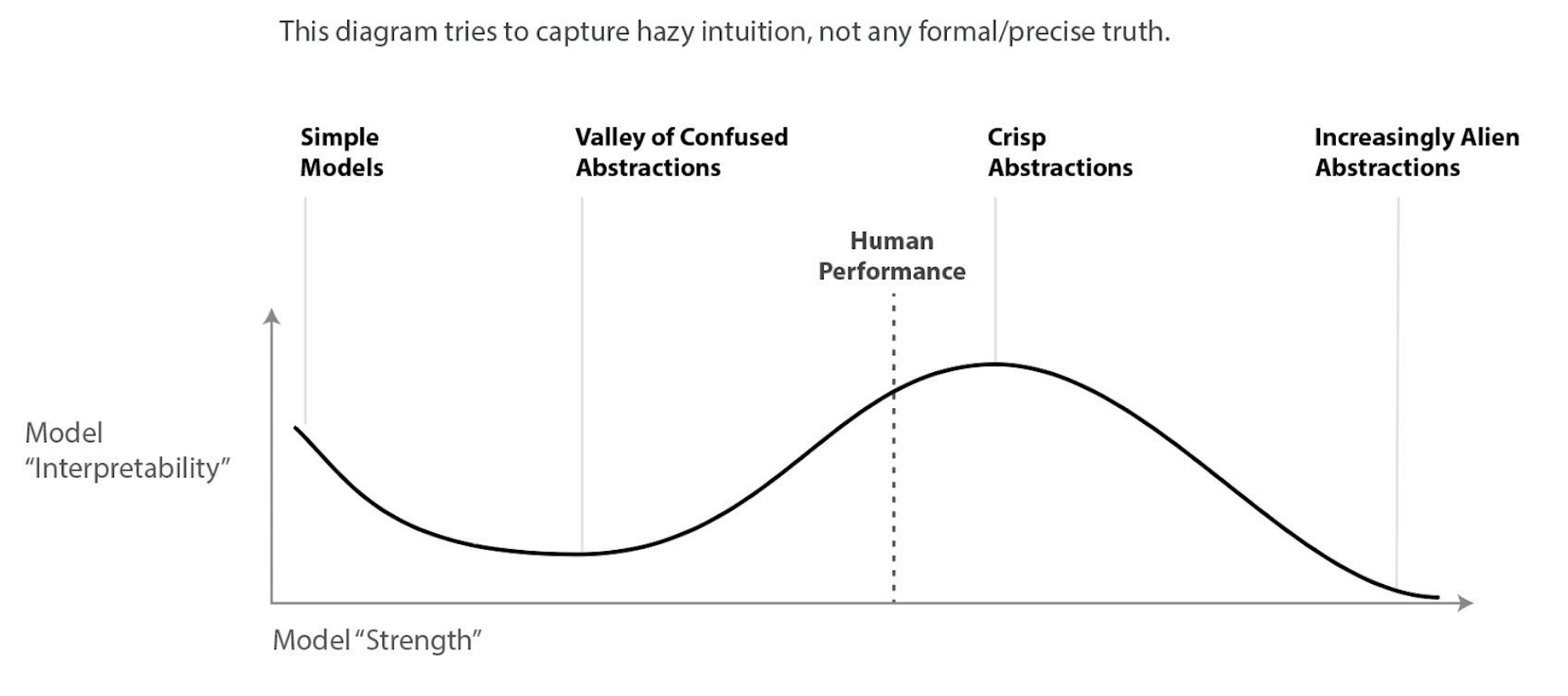

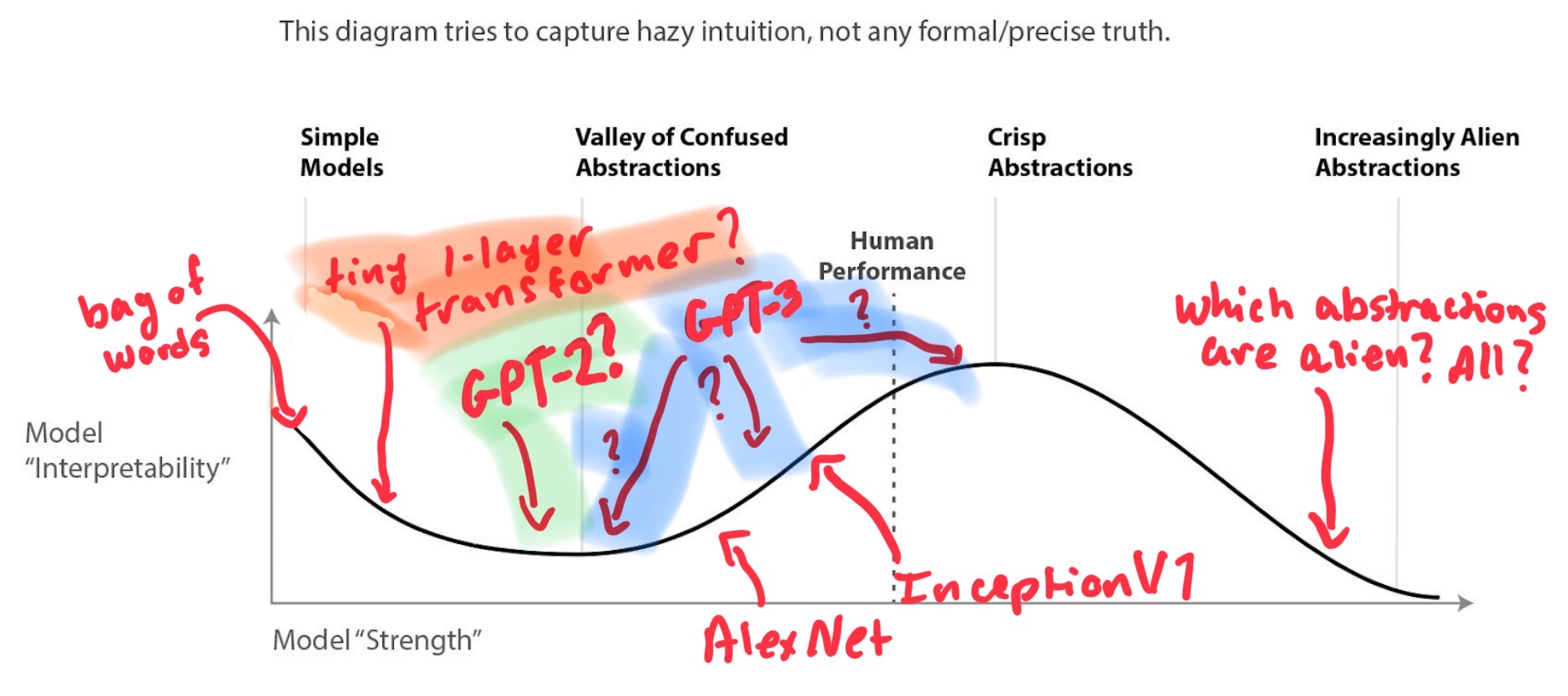

In Evan's post about Chris Olah's views on AGI safety, there is a diagram that loosely sketches how Chris thinks model interpretability changes at different levels of model "strength":

I always thought this diagram still held up in the LLM regime, even though it seems the diagram pointed specifically to interpretability with CNN vision models. However, I had a brief exchange with Neel Nanda about the Valley of Confused Abstractions in the context of language models, and I thought this might be a good thing to revisit.

I've been imagining that language models with the "strength" of GPT-2 are somewhere near the bottom of the Valley of Confused Abstractions, but the much bigger models are a bit further along the model strength axis (though I'm not sure where they fall). I've been thinking about this in the context of model editing or pointing/retargeting the model.

Here's my exchange with Neel:

Neel: I'm personally not actually that convinced that the valley of confused abstractions is real, at least in language. 1L transformers are easier to interpret than large ones, which is completely unlike images!

Me: Does that not fit with the model interpretability diagram from: https://www.alignmentforum.org/posts/X2i9dQQK3gETCyqh2/chris-olah-s-views-on-agi-safety#What_if_interpretability_breaks_down_as_AI_gets_more_powerful_?

Meaning that abstractions are easy enough to find with simple models, but as you scale them up you have to cross the valley of confused abstraction before you get to "crisp abstractions." Are you saying we are close to "human-level" in some domains and it's still hard to find abstractions? My assumption was that we simply have not passed the valley yet so yes larger models will be harder to interpret. Maybe I'm misunderstanding?

Neel: Oh, I just don't think that diagram is correct. Chris found that tiny image models are really hard to interpret, but we later found that tiny transformers are fairly easy.

Me: I guess this is what I'm having a hard time understanding: The diagram seems to imply that tiny (simple?) models are easy to interpret. In the example in the text, my assumption was that AlexNet was just closer to the bottom of the valley than InceptionV1. But you could have even simpler models than AlexNet that would be more interpretable but less powerful?

Neel: Ah, I think the diagram is different for image convnets and for language transformers.

My understanding was that 1-layer transformers being easy to interpret still agrees with the diagram, and current big-boy models are just not past the Valley of Confused Abstractions yet.

Ok, but if what Neel says is true, what might the diagram look like for language transformers?

I'm confused at the moment, but my thinking used to go something like this: GPT-2 is trying to make sense of all the data it has been trained on, but it just isn't big enough to fully grasp that "cheese" and "fromage" are essentially the same concept. My expectation is that as the model gets bigger, it learns that those two tokens mean the same thing, just in different languages. Maybe it does?

With that line of thinking, increasing model strength helps the model form crisper internal abstractions of a concept like "cheese." But then what? At some point, does the model get too powerful, so that it becomes too hard to pull out the "cheese/fromage" abstraction?

Anyway, I had hoped that as LLMs (trained with the transformer architecture) increase in "strength" beyond the current models, their abstractions would become crisper and much easier to identify as the models get closer to some "human-level performance." However, GPT-3 is already superhuman in some respects, so I'm unsure how to disentangle this. I hope this post sparks some good conversation about how to de-confuse this and how the diagram should look for LLM transformers. Is it just some negative exponential with respect to model strength? Or does it have humps like the original diagram?
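To make those two candidate shapes a bit more concrete, here is a toy sketch in Python. Everything in it is an assumption made purely for illustration: the function names, the functional forms, the parameters (`rate`, `peak_at`, `width`), and the arbitrary "strength" units are hypothetical, not fitted to any data and not taken from the original diagram.

```python
import math


def monotone_decay(strength: float, rate: float = 1.0) -> float:
    """Hypothetical 'negative exponential': interpretability only falls with strength."""
    return math.exp(-rate * strength)


def valley_curve(
    strength: float, rate: float = 1.0, peak_at: float = 4.0, width: float = 1.0
) -> float:
    """Hypothetical humped shape: an early decay plus a later bump.

    The bump stands in for a 'crisp abstractions' region; its position and
    width are made-up values chosen only so the curve dips and then rises.
    """
    bump = math.exp(-((strength - peak_at) ** 2) / (2 * width**2))
    return math.exp(-rate * strength) + bump


# Arbitrary strength units from 0.0 to 6.0 in steps of 0.5.
strengths = [i * 0.5 for i in range(13)]
decay = [monotone_decay(s) for s in strengths]
valley = [valley_curve(s) for s in strengths]

for s, d, v in zip(strengths, decay, valley):
    print(f"strength={s:3.1f}  decay={d:.3f}  valley={v:.3f}")
```

The only point of the sketch is that the two hypotheses make different predictions in the middle of the strength axis: under the first, interpretability never recovers, while under the second there is a dip followed by a recovery and then another decline.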