Quantum Computing, Photonics, and Energy Bottlenecks for AGI

💡

Note: I wrote this post in less than a day and didn't want to spend more time on it, but if you have further insights or clarifications, let me know! My goal was to refresh my memory on photonic computing and better understand whether companies will consider non-GPU paths to resolve the issue of energy costs. Claude helped me write and refresh my memory on the quantum computing section.

Sign up for Aligned to Flourish

Aligning AI and Flourishing

Consider subscribing to my newsletter to get notified when I release a new post!

People sometimes ask me if I think quantum computing will impact AGI (Artificial General Intelligence) development, and my usual answer has been that it likely won't play much of a role. However, photonics likely will.

Photonics was one of the subfields I studied and worked in while I was in academia doing physics.

In the context of deep learning, photonic computing uses light (photons) to perform neural-network computations efficiently, while quantum computing exploits quantum-mechanical properties such as superposition and entanglement for computation.

There is some overlap between quantum computing and photonics, which can sometimes be confusing. There's even a subfield called Quantum Photonics, which merges the two. However, they are two distinct approaches to computing.

I'll discuss this in more detail in the next section, but OpenAI recently hired someone who, at PsiQuantum, worked on "designing a scalable and fault-tolerant photonic quantum computer."

(Optional) Quantum computing detour:

Quantum computing can be implemented using various physical systems (in addition to photonic quantum computing), including superconducting qubits, trapped ions, spin qubits, and more.

Superconducting qubits, for example, are typically fabricated on silicon or sapphire substrates using thin-film deposition (another of my past specialties!) and lithography techniques borrowed from the semiconductor industry. They often use aluminum or niobium, with the choice depending on the operating temperature (the higher the superconducting transition temperature, the better), the quality of the resulting Josephson junctions[1], microwave losses (qubits typically operate at microwave frequencies, so lower losses are better), compatibility with the substrate, and so on.

For trapped-ion quantum computers, atomic ions (like ytterbium, particularly the isotope Yb-171) serve as qubits and are confined by electromagnetic fields in vacuum chambers.

Quantum photonics combines the two fields by using photons as the physical platform for quantum computing and communication (photons can be used as qubits, creating photonic quantum gates, and quantum communication protocols can be implemented using photons).

Overall, it's still up in the air which approach, if any, will actually scale to a massive-scale deep learning setting.

[1] A Josephson junction comprises two superconducting electrodes separated by a thin insulating barrier. It allows Cooper pairs (paired electrons) to tunnel through the barrier, resulting in a supercurrent that flows without resistance. The quantum behavior of the Josephson junction, governed by the phase difference between the superconducting electrodes, enables the creation of a two-level quantum system (a qubit!) and the control and manipulation of quantum information in superconducting circuits.

While quantum computing may have some applications, most people expect it to be irrelevant in the grand scheme of things. Drug discovery is often given as an example application, but AlphaFold 3 already exists, so this might be yet another "industry application" that is used to raise funding but never pans out in practice. Most researchers in the field also consider it highly optimistic to expect quantum computers to become very useful within 10 years.

The main advantage of using photonics for deep learning is that it has the potential for high-speed, low-latency, and energy-efficient computation compared to traditional computing. Photonic devices may eventually perform matrix multiplications (obviously important for deep learning) more efficiently than electronic processors.
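To make "obviously important" a bit more concrete, here is a rough FLOP count for a single transformer-style MLP block. The layer sizes are hypothetical, and the activation is assumed to cost about one FLOP per element; the point is only that the matrix multiplications dwarf everything else, which is why hardware that speeds up matmuls, optical or otherwise, matters so much.

```python
# Toy FLOP count for one MLP block (hypothetical sizes), showing that
# matrix multiplications dominate the compute.


def matmul_flops(m: int, k: int, n: int) -> int:
    """FLOPs for an (m x k) @ (k x n) product: one multiply and one add per term."""
    return 2 * m * k * n


def mlp_block_flops(tokens: int, d_model: int, d_hidden: int) -> dict:
    """Count FLOPs for up-projection -> elementwise activation -> down-projection."""
    up = matmul_flops(tokens, d_model, d_hidden)
    down = matmul_flops(tokens, d_hidden, d_model)
    # Assumption: roughly one FLOP per element for the activation function.
    activation = tokens * d_hidden
    matmul = up + down
    return {
        "matmul": matmul,
        "elementwise": activation,
        "matmul_share": matmul / (matmul + activation),
    }


if __name__ == "__main__":
    counts = mlp_block_flops(tokens=2048, d_model=4096, d_hidden=16384)
    print(f"matmul FLOPs:      {counts['matmul']:.3e}")
    print(f"elementwise FLOPs: {counts['elementwise']:.3e}")
    print(f"matmul share:      {counts['matmul_share']:.5f}")
```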

Now, for clarity, I am talking about photonic computing (also known as optical computing) as a broad concept, not specifically optical transistors. Someone messaged me about this and made the mistake of thinking that optical transistors and photonic computing are the exact same thing. They are not.

Optical transistors are a component that could be used for photonic computing, but they are not necessary, and companies may have a better shot at getting photonic computing to work at scale without them.

Optical transistors try to function similarly to electronic transistors but use photons instead of electrons for signal processing. It is correct to say that optical transistors are currently not that great, and it's an active area of research to get them to work.

However, photonic computing is a broader concept that may or may not involve optical transistors as some of its components. Given the limitations of current optical transistors, I understand that companies working on this typically use alternative photonic techniques to make it more feasible and practical for deep learning matrix multiplication.

Optical transistors are just not as technologically mature as photonic components like modulators and waveguides (and may never be). For example, the paper "Experimentally realized in situ backpropagation for deep learning in photonic neural networks" does not use optical transistors. Instead, it uses components such as Mach-Zehnder interferometers, thermo-optic phase shifters, photonic integrated circuits, and silicon photonic waveguides.

The final setup allows for matrix operations for backpropagation.
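For intuition about how interferometers do linear algebra, here is a minimal sketch of an idealized, lossless Mach-Zehnder interferometer (MZI) and a rectangular mesh of them acting on neighboring waveguides. The coupler and phase-shifter conventions are textbook-style assumptions, not the exact device model from the paper, and the phases are random rather than trained. The point is only that each MZI is a programmable 2×2 unitary and that composing them gives an N×N unitary acting on the light's amplitudes.

```python
import numpy as np


def beam_splitter() -> np.ndarray:
    """Ideal lossless 50:50 directional coupler (assumed convention)."""
    return np.array([[1, 1j], [1j, 1]]) / np.sqrt(2)


def phase_shifter(phi: float) -> np.ndarray:
    """Phase shift on the upper arm only, e.g. set by a thermo-optic heater."""
    return np.diag([np.exp(1j * phi), 1.0])


def mzi(theta: float, phi: float) -> np.ndarray:
    """2x2 MZI: external phase phi, coupler, internal phase theta, coupler."""
    bs = beam_splitter()
    return bs @ phase_shifter(theta) @ bs @ phase_shifter(phi)


def embed(u2: np.ndarray, n: int, k: int) -> np.ndarray:
    """Place a 2x2 block acting on waveguides k and k+1 inside an n x n identity."""
    u = np.eye(n, dtype=complex)
    u[k:k + 2, k:k + 2] = u2
    return u


def rectangular_mesh(thetas: np.ndarray, phis: np.ndarray, n: int) -> np.ndarray:
    """Compose n(n-1)/2 MZIs in n alternating columns on neighboring waveguide pairs."""
    u = np.eye(n, dtype=complex)
    idx = 0
    for layer in range(n):
        for k in range(layer % 2, n - 1, 2):
            u = embed(mzi(thetas[idx], phis[idx]), n, k) @ u
            idx += 1
    return u


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n = 4
    m = n * (n - 1) // 2
    U = rectangular_mesh(rng.uniform(0, 2 * np.pi, m), rng.uniform(0, 2 * np.pi, m), n)
    x = rng.normal(size=n) + 1j * rng.normal(size=n)  # input optical amplitudes
    y = U @ x                                          # output amplitudes
    print("unitary:", np.allclose(U.conj().T @ U, np.eye(n)))
    print("power conserved:", np.isclose(np.linalg.norm(y), np.linalg.norm(x)))
```

Setting the internal phase to 0 routes all light to the opposite waveguide, and setting it to π keeps it in the same one; intermediate values split the power, which is what makes the MZI a tunable matrix element. Published mesh layouts of this rectangular kind can be programmed to implement arbitrary unitaries; the random phases here only illustrate that the composition stays unitary.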

As an AI governance friend of mine told me:

If you want to train or run AI systems optically, you’re probably not going to use optical transistors (which, indeed, aren’t very competitive with electronic ones). Instead, you exploit the fact that deep learning depends hugely on matrix multiplications, for which you can use specialized optical hardware (which includes things like Mach Zehnder interferometers and so forth). This paper provides more information.
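Since a mesh of interferometers only implements unitary matrices, a general weight matrix needs one more trick. One commonly described approach is to factor it with the singular value decomposition, W = U Σ V†, run U and V† on two meshes, and put a column of attenuators (or amplifiers) in between for Σ. The sketch below simulates that pipeline with NumPy; the function name and the choice to rescale Σ so that passive attenuators suffice are my assumptions, not something taken from the paper above.

```python
import numpy as np


def photonic_style_matvec(w: np.ndarray, x: np.ndarray) -> np.ndarray:
    """Compute w @ x through the factorization w = U diag(s) V^H.

    In an optical implementation, U and V^H would be unitary MZI meshes and
    diag(s) a column of attenuators/amplifiers; here each stage is a NumPy product.
    """
    u, s, vh = np.linalg.svd(w)  # full SVD: u is (m, m), vh is (n, n)
    scale = s.max()
    if scale == 0:
        return np.zeros(w.shape[0])
    # Assumption: passive optics can only attenuate, so squeeze singular values
    # into [0, 1] and undo the scale electronically at the end.
    sigma = np.zeros((u.shape[0], vh.shape[0]))
    np.fill_diagonal(sigma, s / scale)
    stage1 = vh @ x          # first unitary mesh
    stage2 = sigma @ stage1  # per-waveguide attenuation
    stage3 = u @ stage2      # second unitary mesh
    return scale * stage3


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    w = rng.normal(size=(3, 5))
    x = rng.normal(size=5)
    print("matches w @ x:", np.allclose(photonic_style_matvec(w, x), w @ x))
```

The design choice worth noting is that all of the "learning" lives in the phases of the two meshes and the attenuation values; the light itself just propagates through, which is where the hoped-for speed and energy savings come from.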

Anyway, let's discuss why OpenAI hired a photonic quantum computing guy.

Is OpenAI investing in Quantum Photonic Computing?

As I mentioned earlier, OpenAI has recently (publicly known as of March 5th) hired researcher Ben Bartlett, who worked at PsiQuantum.

Here's his website bio:

_I’m a currently a researcher at OpenAI working on a project which could accelerate AI training. Before my recent switch into AI, I was a quantum computer architect at PsiQuantum working to design a scalable and fault-tolerant photonic quantum computer.

I have a PhD in applied physics from Stanford University, where I designed programmable photonic computers for quantum information processing and ultra high-speed machine learning. Most of my research sits at the intersection of nanophotonics, quantum physics, and machine learning, and basically consists of me designing little race tracks for photons that trick them into doing useful computations.

During my PhD I was an AI resident at Google X, working on an undisclosed project involving electromagnetics, machine learning, and distributed computing._

One of his recent papers is titled "Experimentally realized in situ backpropagation for deep learning in photonic neural networks."

My guess is that OpenAI took a bet on this guy to see if photonic quantum computing could eventually pay off in terms of "accelerating AI training." They might not be certain it'll pay off, but it's a research bet. Note the word "could" in "could accelerate AI training" in his bio. At least in this case, the project seems closely related to improving optimization and making models converge faster. It's unclear how much of this relates to photonic quantum computing specifically. It could be better training algorithms informed by his experience, or an attempt to make new kinds of infrastructure work for AI training.

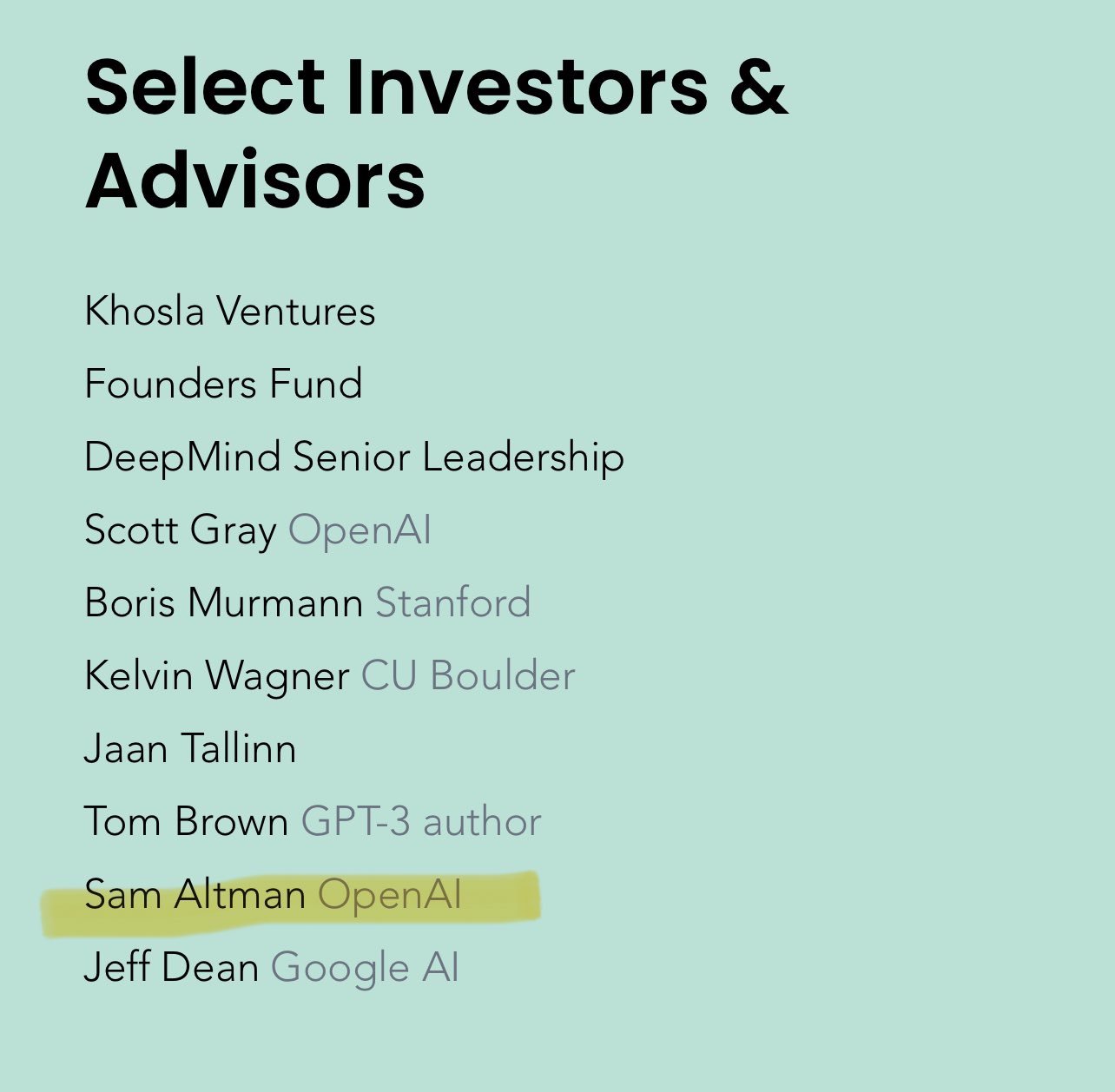

Additionally, OpenAI employees Sam Altman and Scott Gray have invested in Fathom Radiant (a fairly well-known startup in the AI Safety space), which has been working on photonic computing for a while. Tom Brown (who was at OpenAI but is now at Anthropic), "DeepMind Senior Leadership," Jeff Dean, and Jaan Tallinn also invested. I don't know when they invested.

So, we may see a point where photonic computing or photonic quantum computing could play a role.

Neuromorphic chips? Photonic chips? So far, it's a long-term de-risk play.

Will TPUs impact AGI development?

I used to say TPUs were irrelevant, but I don't think this anymore. They're relevant because Google now has much of its own compute and is not as dependent on NVIDIA's GPUs. However, despite planning to create mega-data centers, a company like Microsoft is still very much dependent on NVIDIA.

This is becoming increasingly important as the race towards AGI heats up. The article "OpenAI Is Doomed - Et tu, Microsoft?" (by SemiAnalysis) highlights how having in-house AI chips like TPUs can provide a significant cost advantage in the long run. Google, Meta, and Amazon are all ramping up their custom silicon efforts, while Microsoft lags behind in this area. From the SemiAnalysis post:

In that case, Google is king given their hyper aggressive TPU buildout pace. Ironically, Google now has focus and is directing all large-scale training efforts into one combined Google Deepmind team while Microsoft is starting to lose focus by directing resources to their own internal models that compete with OpenAI.

So, while quantum computing is less likely to play a significant role, photonics and custom AI chips like TPUs still have roles to play in AGI development.

Confronting the Energy Bottleneck for AGI

Energy constraints may become a critical bottleneck for AGI development, as Zuckerberg noted in his interview with Dwarkesh Patel. As companies keep scaling models, the energy requirements for training and inference will reach insane levels. So it's possible that companies have started thinking about energy and ways to deal with this issue. Of course, Sam Altman has already invested in Helion (which then signed a deal with Microsoft) and Oklo (which, coincidentally, just debuted on the NYSE and plunged 54%).

To put this into perspective, a 1 GW data center running at full capacity for a year would consume approximately 8.76 terawatt-hours (TWh) of electricity, which is more than the annual electricity consumption of many small countries. With Microsoft rumored to be planning a 5 GW data center for OpenAI by 2030 (Microsoft also made a 10.5 GW deal with Brookfield), the energy requirements could reach a staggering 43.8 TWh per year, equivalent to the annual electricity consumption of a medium-sized country like Portugal. Building new power plants and transmission lines to support these energy-intensive data centers is a long-term project that can take many years due to regulatory hurdles and land use issues.

For additional perspective, a 1 GW data center would use about 0.22% of total U.S. electricity consumption in 2021 and 0.11% of China's. So if, say, five 5 GW data centers were built for training runs, they would use about 5.6% of U.S. and 2.6% of Chinese electricity consumption.

💡

Here's where the numbers come from:

- 1 GW = 1,000,000 kilowatts (kW)
- 1 year = 365 days × 24 hours = 8,760 hours
- Energy consumed in 1 year = Power capacity × Time (hours)
- Energy consumed in 1 year (GWh) = 1 GW × 8,760 hours = 8,760 GWh
- 1 TWh = 1,000 GWh = 1,000,000,000 kWh
- Energy consumed in 1 year (TWh) = 8,760 GWh ÷ 1,000 GWh/TWh = 8.76 TWh
- So, 5 GW gives you 8.76 × 5 = 43.8 TWh
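The same arithmetic as a short script, also covering the U.S. and China percentages. The national consumption totals (about 3,930 TWh for the U.S. and 8,310 TWh for China in 2021) are approximate values I'm assuming for illustration, and every data center is assumed to run at full capacity all year.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours

# ASSUMPTION: approximate 2021 national electricity consumption in TWh,
# used only to illustrate the percentage calculation.
NATIONAL_TWH_2021 = {"U.S.": 3930.0, "China": 8310.0}


def annual_energy_twh(power_gw: float, utilization: float = 1.0) -> float:
    """GW x hours = GWh; divide by 1,000 to get TWh."""
    return power_gw * utilization * HOURS_PER_YEAR / 1000.0


def national_share(power_gw: float, country: str) -> float:
    """Fraction of a country's 2021 electricity use consumed by power_gw of data centers."""
    return annual_energy_twh(power_gw) / NATIONAL_TWH_2021[country]


if __name__ == "__main__":
    print(f"1 GW: {annual_energy_twh(1):.2f} TWh/yr")  # 8.76
    print(f"5 GW: {annual_energy_twh(5):.1f} TWh/yr")  # 43.8
    for country in NATIONAL_TWH_2021:
        one_site = national_share(1, country)
        five_by_five = national_share(5 * 5, country)  # five 5 GW data centers
        print(f"{country}: 1 GW = {one_site:.2%}, five 5 GW sites = {five_by_five:.1%}")
```

With those assumed totals, the script lands at roughly 0.22% and 5.6% for the U.S. and 0.11% and 2.6% for China, in line with the figures above.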

To improve energy efficiency and reduce reliance on expensive, general-purpose GPUs, Meta is investing heavily in custom silicon, such as the Meta Training and Inference Accelerator, designed specifically for AI workloads. By optimizing these chips for inference tasks, Meta can free up GPUs for training and eventually use its own silicon for training as well. However, custom silicon alone won't solve the energy bottleneck.

One possible way to address this is photonic chips, which have the potential to achieve much higher energy efficiency than traditional electronic chips. Note that photonic chips can be built in various ways, and it's still unclear which approach will work well at massive scale.

There are a few important things to know about photonic computing vs your typical GPU (these numbers are speculative, but based on my quick review, they are generally considered to be a conservative ballpark):

- Energy per operation for electronic computing (GPU): 100 fJ to 1 pJ per operation

- Energy per operation for photonic computing: 1 fJ per operation

So, if we only consider the above, photonic computing is roughly 100 to 1,000 times more energy-efficient than electronic computing, with 100x as the conservative end. Whether you do a 1 billion dollar training run or a 100 billion dollar one (Dario Amodei, CEO of Anthropic, has said we'll eventually see 100 billion dollar training runs), you get a ton more FLOPs for your dough.
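A quick sketch of that arithmetic, using the speculative per-operation figures listed above. The 1e26-operation training run is a hypothetical size picked only for illustration, and the estimate is device-level only: it ignores memory, data movement, and cooling.

```python
# Device-level energy comparison using the post's speculative per-operation figures.
J_PER_FJ = 1e-15   # joules per femtojoule
J_PER_KWH = 3.6e6  # joules per kilowatt-hour

GPU_FJ_PER_OP = (100.0, 1000.0)  # 100 fJ to 1 pJ per operation
PHOTONIC_FJ_PER_OP = 1.0         # ~1 fJ per operation


def efficiency_ratio(electronic_fj: float, photonic_fj: float = PHOTONIC_FJ_PER_OP) -> float:
    """How many times less energy per operation the photonic figure implies."""
    return electronic_fj / photonic_fj


def energy_kwh(total_ops: float, fj_per_op: float) -> float:
    """Energy for total_ops operations; ignores memory, data movement, and cooling."""
    return total_ops * fj_per_op * J_PER_FJ / J_PER_KWH


if __name__ == "__main__":
    ops = 1e26  # hypothetical training-run size, for illustration only
    for fj in GPU_FJ_PER_OP:
        print(f"GPU at {fj:g} fJ/op: {energy_kwh(ops, fj):.3g} kWh "
              f"({efficiency_ratio(fj):g}x the photonic figure)")
    print(f"Photonic at {PHOTONIC_FJ_PER_OP:g} fJ/op: {energy_kwh(ops, PHOTONIC_FJ_PER_OP):.3g} kWh")
```

For a fixed energy (and therefore electricity) budget, the same ratio applies in reverse: 100x to 1,000x more operations per joule.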

However, the gain may be even larger than the conservative 100x figure. A governance researcher at Epoch AI, who recently started looking into this too and would really like to know whether photonic computing will take off because of energy bottlenecks, pointed out to me:

The gains may even be larger since overall energy efficiency gains can grow larger at massive scales of training, e.g. this paper suggests an energy advantage of >100,000x over existing GPUs if you’re able to improve optical devices enough.

On the other hand we also need to account for improvements in the energy efficiency of CMOS processors. So for comparison, I think we have maybe a 50-1000x gain in FLOP/J remaining in these devices if we try really hard to optimise them.
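A naive way to combine the two quoted ranges is to divide the photonic gain by the remaining CMOS gain, assuming both are measured against today's GPUs and nothing else changes. This is my simplification for illustration, not the researcher's model.

```python
def remaining_advantage(photonic_gain: float, cmos_gain: float) -> float:
    """Photonic edge over *future* CMOS, with both gains relative to today's GPUs."""
    return photonic_gain / cmos_gain


if __name__ == "__main__":
    for photonic_gain in (100, 100_000):  # conservative figure and the quoted upper end
        for cmos_gain in (50, 1000):      # CMOS headroom range from the quote
            edge = remaining_advantage(photonic_gain, cmos_gain)
            print(f"photonics {photonic_gain:,}x vs CMOS {cmos_gain:,}x -> {edge:g}x")
```

Under this rough framing, the >100,000x scenario still leaves a large edge (100x to 2,000x), while the conservative 100x figure could be erased if CMOS gets close to the top of its 50-1000x range.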

And that's without even mentioning cooling requirements, which are much higher for electronic computing than for photonic computing! I'd appreciate it if someone did a detailed Fermi estimate of the costs. My quick estimate for cooling costs is ~500 million dollars per year for a 1 GW data center.
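As a starting point for that Fermi estimate, here is a minimal template based on power usage effectiveness (PUE, total facility power divided by IT power). It treats all non-IT overhead as cooling and prices it at a flat electricity rate. The PUE values and prices in the two scenarios are assumptions, and the template leaves out cooling equipment, water, and maintenance.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours


def annual_cooling_cost_usd(it_power_gw: float, pue: float, usd_per_kwh: float) -> float:
    """Electricity cost of non-IT overhead, treating all (PUE - 1) overhead as cooling.

    PUE = total facility power / IT power, so overhead power = IT power x (PUE - 1).
    Ignores cooling equipment capex, water, and maintenance.
    """
    overhead_kw = it_power_gw * 1e6 * (pue - 1.0)  # GW -> kW
    return overhead_kw * HOURS_PER_YEAR * usd_per_kwh


if __name__ == "__main__":
    # ASSUMED scenarios for illustration only.
    scenarios = {
        "efficient hyperscale (PUE 1.1, $0.08/kWh)": (1.1, 0.08),
        "less efficient facility (PUE 1.5, $0.10/kWh)": (1.5, 0.10),
    }
    for name, (pue, price) in scenarios.items():
        cost = annual_cooling_cost_usd(1.0, pue, price)
        print(f"{name}: ~${cost / 1e6:,.0f}M per year")
```

The two assumed scenarios come out around $70M and $438M per year, so the answer swings by several times depending on the inputs, which is exactly why a more careful estimate would be useful.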

Cooling costs could be even higher at that scale, because a 1+ GW data center would generate an enormous amount of heat. However, companies are working on distributed training across data centers, so compute won't need to be concentrated in a single site.

For example, in Google's Gemini report:

Training Gemini Ultra used a large fleet of TPUv4 accelerators owned by Google across multiple datacenters. [...] we combine SuperPods in multiple datacenters using Google’s intra-cluster and inter-cluster network. Google’s network latencies and bandwidths are sufficient to support the commonly used synchronous training paradigm, exploiting model parallelism within superpods and data-parallelism across superpods.
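To make "data-parallelism across superpods" concrete, here is a toy sketch of one synchronous data-parallel step: each replica (standing in for a superpod) computes a gradient on its own shard of the batch, the gradients are averaged (the role a cross-datacenter all-reduce plays), and every replica applies the same update. It uses a linear model and NumPy only, and it does not model the model parallelism inside each superpod.

```python
import numpy as np


def mse_grad(w: np.ndarray, x: np.ndarray, y: np.ndarray) -> np.ndarray:
    """Gradient of mean squared error for a linear model y_hat = x @ w."""
    return 2.0 * x.T @ (x @ w - y) / len(y)


def sync_data_parallel_step(w: np.ndarray, x: np.ndarray, y: np.ndarray,
                            n_replicas: int, lr: float) -> np.ndarray:
    """One synchronous step: shard the batch, compute local gradients,
    average them (standing in for an all-reduce), apply one shared update."""
    x_shards = np.array_split(x, n_replicas)
    y_shards = np.array_split(y, n_replicas)
    local_grads = [mse_grad(w, xs, ys) for xs, ys in zip(x_shards, y_shards)]
    avg_grad = np.mean(local_grads, axis=0)
    return w - lr * avg_grad


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = rng.normal(size=(64, 3))
    y = x @ np.array([1.0, -2.0, 0.5])
    w0 = np.zeros(3)
    w_dp = sync_data_parallel_step(w0, x, y, n_replicas=4, lr=0.1)
    w_full = w0 - 0.1 * mse_grad(w0, x, y)
    print("matches full-batch step:", np.allclose(w_dp, w_full))
```

With equal-sized shards, the averaged update matches a single full-batch gradient step exactly, which is what lets synchronous training spread one batch across sites, as long as the network is fast enough to exchange gradients every step.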

One of the key questions will be: Is the additional compute worth the economic cost required to gain new capabilities, or will the cost become too great at some point?

Additionally, it may turn out that seed AGI (which then makes itself highly efficient and capable of running on cheap compute) doesn't need compute on the scale of something like 5-10% of global GDP. At some point along the cost curve, you start seriously considering spending 1 billion dollars on creating data-efficient architectures rather than 100 billion dollars on a training run.

Of course, there's a lot of speculation here (and, as discussed above, the gain may be more than 100x). Photonic computing is not currently used for training runs, and it would take a massive effort to scale it up that much. But it would be great to know whether photonic computing could ease energy bottlenecks and whether companies are considering it. It may impact AGI timelines and governance.
